I. Introduction

Numerous studies have explored how speech sounds are processed and integrated into linguistic comprehension. Speech perception relies on mapping acoustic input onto phonological categories.[1] A key mechanism underlying this process is categorical perception, a well-recognized cognitive phenomenon in which sensory input is interpreted as discrete abstract categories rather than in its exact physical form. Categorical perception of speech allows listeners to distinguish sounds more accurately when they belong to different phonemic categories than when they are variations within the same category, even if their physical differences are identical.[2] Consequently, listeners can ignore irrelevant acoustic variation within phonemic categories and focus on the significant differences between categories.

Speech perception entails recognizing words composed of sequential speech sounds, often aided by semantic cues under adverse listening conditions. The ‘Ganong effect’ exemplifies this phenomenon, demonstrating that phoneme categorization boundaries shift based on the lexical status of the context.[3] Borsky et al.[4] investigated this effect by constructing a ten-step /g/-/k/ continuum that varied in Voice Onset Time (VOT). They embedded these stimuli within sentences containing real words (e.g. ‘goat’ vs ‘coat’) or nonwords (e.g. ‘goke’ vs ‘coke’). Participants judged whether the auditory stimuli matched visually displayed words. The results indicated a significant lexical bias for ambiguous sounds in the real-word context, whereas this effect was reduced in the nonword context. Additional evidence was reported from an experiment using a VOT-based /ba/–/pa/ continuum as stimuli.[5] The findings demonstrated high response accuracy near the phonemic boundary and a strong lexical bias favoring real words (e.g. ‘bat’ vs ‘pat’). In contrast, the nonword context (e.g. ‘baf’ vs ‘paf’) showed weaker lexical effects, supporting the influence of lexical knowledge on phoneme categorization.

Despite robust behavioral evidence supporting the Ganong effect, its precise neural mechanisms remain elusive. Event-Related Potentials (ERPs), derived from Electroencephalogram (EEG) recordings, provide time-locked measures reflecting brain activity associated with sensory, cognitive, or motor events. Among ERP components, P300 has become a critical marker of high-level cognitive processes such as stimulus evaluation, categorization, and working memory,[6] following its initial identification by Sutton et al.[7] in 1965. The P300 component can be elicited by various stimuli, including auditory, visual, olfactory, somatosensory, and multimodal inputs.[6] In speech and hearing research, it is widely regarded as an essential index of speech processing. P300 is sensitive to phoneme perception, reflecting the brain’s ability to categorize speech sounds into meaningful linguistic units.[8] Notably, a larger P300 amplitude is elicited when stimuli cross phonetic category boundaries than for within-category contrasts, indicating that it reflects phonetic processing.[9] Furthermore, P300 responses are observed to acoustic changes such as variations in frequency, intensity, and duration, highlighting their sensitivity to low-level acoustic or subphonetic processing.[10,11]

The Ganong effect provides critical insight into the mechanisms of speech perception. Although it is often cited as evidence for top-down processing in speech perception models, the phenomenon can be interpreted either within a bottom-up framework, as reflecting higher-order decision-making processes, or within a top-down framework, through interactive feedback mechanisms. The autonomous model posits a strictly bottom-up architecture, in which lower-level acoustic-phonetic processes transmit information unidirectionally to higher-level lexical representations, without reciprocal influence. In contrast, the interactive model incorporates top-down processing, allowing higher-level lexical or semantic knowledge to exert modulatory effects on lower-level perceptual processes via feedback mechanisms.[12,13,14,15]

The present study aims to investigate whether P300 and behavioral responses reflect the Ganong effect within an active oddball paradigm. By integrating electrophysiological and behavioral measures in response to cross-category speech stimuli presented in lexical contexts, this study seeks to elucidate whether lexical influences modulate speech processing at a pre-lexical stage. If P300 and behavioral responses are sensitive to lexical influence, the /pi/ context is expected to elicit larger P300 amplitudes and faster reaction times, possibly with shorter latencies and higher response accuracy, than the /bi/ context. Given the P300’s association with attentive processing and stimulus evaluation at pre-lexical stages, the results will be interpreted within the framework of competing models of speech perception.[16,17]

II. Methods

This study was approved by the University of Tennessee Health Science Center Institutional Review Board (# 11-01609-XP). Participants signed informed consent statements, filled out a case history form, and received a screening test. The sample size was determined prior to the experiment based on a power analysis. Using stimuli drawn from a seven-step /bi/-/pi/ continuum, electrophysiological and behavioral responses were obtained simultaneously for statistical analysis.[17]

2.1 Subjects

Participants were 21 right-handed (Oldfield, 1971) English speakers (5 male, 16 female) aged 18 to 28 years (mean = 22.3) with no history of neurological or psychiatric disorders, or of speech, language, hearing, or learning disorders. Their audiometric thresholds were at or below 15 dB HL between 250 Hz and 8,000 Hz.

2.2 Stimuli

Stimuli were created by recording a male native speaker of English producing /bi/, /stiŋ/ (for the /bi/ context), and /sup/ (for the /pi/ context) with consistent pitch, loudness, and duration in a sound-attenuating chamber. Recordings were made with a high-quality microphone (Spher-O-Dyne), pre-amplified (Tucker-Davis, Model MA2), digitized (16-bit A/D converter, Tucker-Davis DD1), and sampled at 44.1 kHz (CSRE, version 4.5). Individual tokens were edited and normalized for uniform intensity (Adobe Audition, Version 1.5); their durations were 355 ms (/bi/), 468 ms (/stiŋ/), and 406 ms (/sup/).

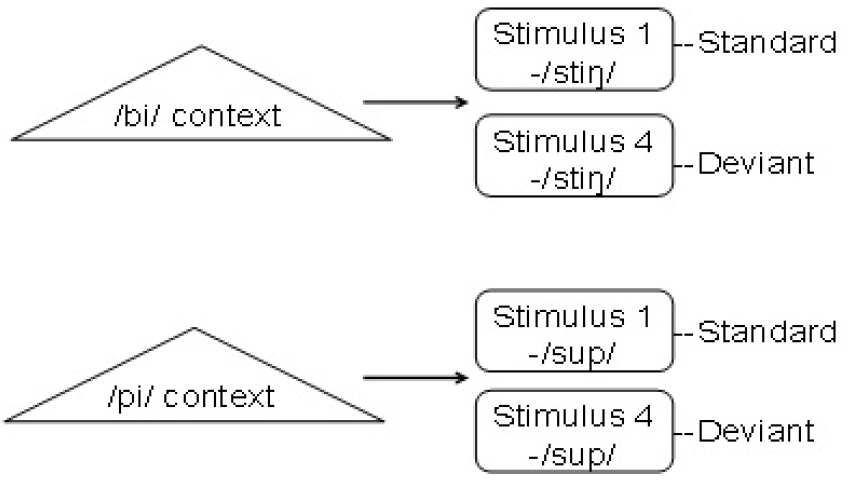

A seven-step /bi/-/pi/ continuum was generated by manipulating VOT systematically. The burst and aspiration portion of a synthetic /pi/ was digitally inserted before the voicing portion of the natural /bi/ in 8 ms increments, yielding a prototypical /bi/ with a VOT of 8 ms (Stimulus 1) and a prototypical /pi/ with a VOT of 56 ms (Stimulus 7) (Adobe Audition, Version 1.5). To determine the stimuli for the oddball paradigm, these stimuli were embedded in the /bi/ (‘bee sting’) and /pi/ (‘pea soup’) contexts for a two-alternative forced-choice labeling test, in which participants listened to the stimuli at approximately 75 dB SPL through headphones and were instructed to click the mouse on the letter corresponding to what they heard. Each stimulus was presented ten times in random order, yielding 140 responses (7 steps × 2 lexical contexts × 10 times). A two-way repeated-measures ANOVA was conducted on the arcsine-transformed /bi/ responses, with lexical context and VOT as factors. Based on its results, Stimulus 1 (within-category, /bi/) and Stimulus 4 (cross-category, /?/), which showed the largest lexical effect, were selected as stimuli for the oddball paradigm. These stimuli were embedded within the lexical contexts of /bi/ (‘bee sting’) and /pi/ (‘pea soup’), serving as standard and deviant stimuli, respectively.
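The layout of the continuum and the size of the labeling test can be checked with a short calculation. The following is a minimal sketch, not the authors' stimulus-generation procedure; it assumes the seven steps are equally spaced between the stated 8 ms and 56 ms endpoints, and all variable names are illustrative.

```python
# Sketch only: assumes equal spacing between the 8 ms and 56 ms endpoints.
N_STEPS = 7
VOT_START_MS = 8
VOT_END_MS = 56

# Step size implied by the endpoints and the number of steps.
step_ms = (VOT_END_MS - VOT_START_MS) / (N_STEPS - 1)
vot_ms = [VOT_START_MS + i * step_ms for i in range(N_STEPS)]

# Labeling test: every step in both lexical contexts, ten repetitions each.
contexts = ["bee sting", "pea soup"]
repetitions = 10
n_trials = N_STEPS * len(contexts) * repetitions

print(vot_ms)
print(n_trials)
```

Under this equal-spacing assumption, Stimulus 4 falls at the midpoint of the continuum, which is in keeping with its ambiguous /?/ label.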

2.3 Procedure

The stimuli were presented binaurally through Etymotic ER-3A insert earphones at approximately 75 dB SPL. All data were collected with NeuroScan hardware and software. Participants were seated comfortably in a dark sound-treated booth, connected to the Compumedics 64-channel NeuroScan system, and instructed to press a response button as soon as possible upon detecting a deviant stimulus within a frequent /bi/ sound stream in both the /bi/ and /pi/ contexts.

For the electrophysiological and discrimination tests, a total of 450 stimuli, composed of 369 standard stimuli (82 %) and 81 deviant stimuli (18 %), were presented in the active oddball paradigm for each lexical context. The stimuli were divided into three blocks of 150 stimuli, each containing 123 standard stimuli (82 %) and 27 deviant stimuli (18 %). The sequence of block presentation was counterbalanced across participants. Within each block, stimuli were presented in pseudorandom sequences with at least 8 standard stimuli preceding the first deviant and no fewer than 3 standard stimuli after each deviant. A 1,700 ms Inter-Stimulus Interval (ISI) was used to allow sufficient time for the behavioral response. Fig. 1 illustrates the stimuli used for the oddball paradigm in the /bi/ and /pi/ contexts.
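One way to build a block that satisfies these sequencing constraints is sketched below. This is an illustrative construction, not the presentation software used in the study: the function name `make_oddball_block` and its parameters are hypothetical, and it assumes that the three-standard minimum also applies after the final deviant. It reserves the mandatory standards first and then scatters the remaining ones at random.

```python
import random

def make_oddball_block(n_standard=123, n_deviant=27, lead_in=8, min_gap=3, seed=None):
    """Return a list of 'S' (standard) and 'D' (deviant) labels for one block."""
    rng = random.Random(seed)
    # gaps[0]: standards before the first deviant.
    # gaps[i]: standards that follow deviant number i (i = 1..n_deviant).
    gaps = [lead_in] + [min_gap] * n_deviant
    spare = n_standard - sum(gaps)
    if spare < 0:
        raise ValueError("not enough standards to satisfy the constraints")
    # Scatter the remaining standards across the gaps at random.
    for _ in range(spare):
        gaps[rng.randrange(len(gaps))] += 1
    block = ["S"] * gaps[0]
    for gap in gaps[1:]:
        block.append("D")
        block.extend(["S"] * gap)
    return block

block = make_oddball_block(seed=1)
print(len(block), block.count("S"), block.count("D"))
```

Three such blocks per lexical context give the 450 stimuli described above. The construction does not sample uniformly from all valid sequences; it is simply a compact way to guarantee the constraints.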

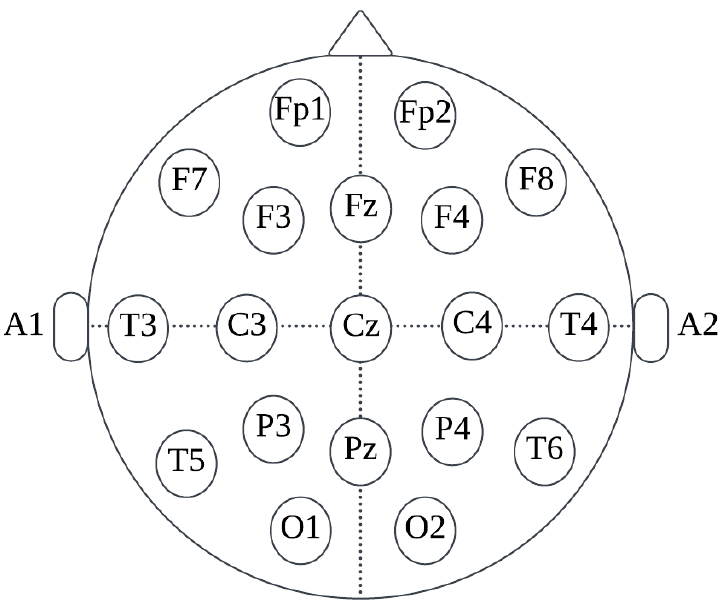

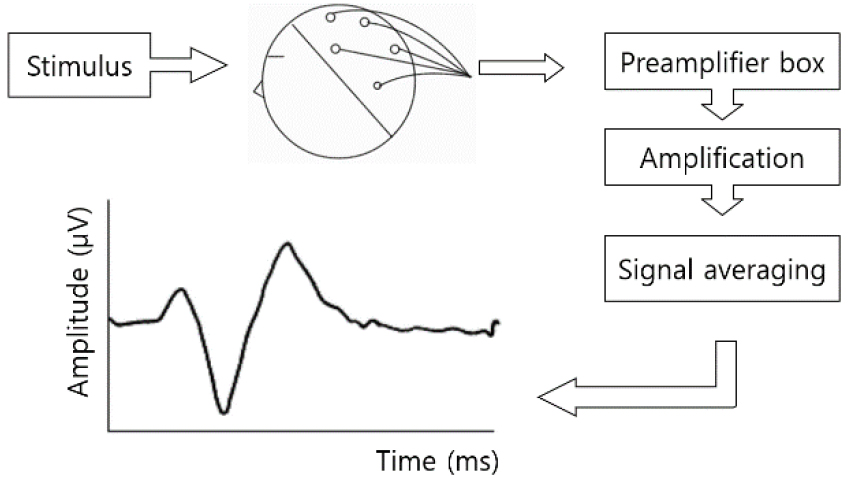

EEG activity was recorded while participants responded behaviorally, with electrodes placed at F3, Fz, F4, C3, Cz, C4, P3, Pz, and P4 according to the International 10-20 System[18] (see Fig. 2), referenced to the electrode between Cz and CPz, and grounded at the electrode between Fz and FPz. Vertical and horizontal Electrooculograms (EOGs) were recorded by electrodes placed above, below, and on the inner and outer canthi of the left eye. All electrodes were Ag/AgCl, and impedance was maintained below 5 kΩ. The continuous EEG was recorded with a bandpass of 0.01 Hz ~ 30 Hz and digitally sampled at 500 Hz, with epochs spanning 100 ms before to 1,000 ms after stimulus onset. Fig. 3 depicts the schematic diagram of ERP recording.

2.4 Statistical analysis

For electrophysiological data, all post-stimulus data (0 ms ~ 1,000 ms) were adjusted to the prestimulus baseline (-100 ms ~ 0 ms). Amplitude and latency of P300 were measured on the deviant waveform. The most positive peak between 320 ms and 500 ms was scored as the P300, based on previous research[9,19,20] and visual inspection of the group grand average waveform. Two separate repeated-measures ANOVAs were conducted, one on P300 amplitude and one on P300 latency, each with lexical context (/bi/ context, /pi/ context) and scalp distribution (frontal, central, parietal sites) as factors. Bonferroni corrections were applied, with significance evaluated at an alpha level of .05.
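The baseline adjustment and peak scoring described here can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the NeuroScan processing pipeline: the helper names `baseline_correct` and `score_p300` are hypothetical, the P300 is taken as the most positive sample in the window, and the waveform is synthetic rather than data from the study.

```python
import math

SAMPLE_RATE_HZ = 500
STEP_MS = 1000 // SAMPLE_RATE_HZ              # 2 ms between samples
times_ms = list(range(-100, 1000, STEP_MS))   # epoch from -100 ms up to 998 ms

def baseline_correct(epoch, times, start_ms=-100, end_ms=0):
    """Subtract the mean of the prestimulus interval from every sample."""
    baseline = [v for v, t in zip(epoch, times) if start_ms <= t < end_ms]
    offset = sum(baseline) / len(baseline)
    return [v - offset for v in epoch]

def score_p300(epoch, times, start_ms=320, end_ms=500):
    """Return (amplitude, latency_ms) of the most positive sample in the window."""
    window = [(v, t) for v, t in zip(epoch, times) if start_ms <= t <= end_ms]
    return max(window, key=lambda pair: pair[0])

# Synthetic waveform (made up for illustration): a 7-unit positive bump
# centred at 400 ms riding on a constant 2-unit offset.
raw = [2.0 + 7.0 * math.exp(-((t - 400) / 60) ** 2 / 2) for t in times_ms]

corrected = baseline_correct(raw, times_ms)
amplitude, latency_ms = score_p300(corrected, times_ms)
print(round(amplitude, 2), latency_ms)
```

In practice the scoring would be applied per participant, electrode, and lexical context before the values enter the ANOVAs.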

For discrimination data, response accuracy was analyzed using the arcsine-transformed percentage of correct responses, while reaction times were measured as the time between the stimulus trigger and the moment listeners pressed the response button. Two repeated-measures ANOVAs were conducted, one on response accuracy and one on reaction times, each with lexical context (/bi/ context, /pi/ context) as the factor. Bonferroni corrections were applied, with significance evaluated at an alpha level of .05.
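For readers unfamiliar with the arcsine transformation, a minimal sketch follows. The text does not state which variant was used, so this assumes the common form arcsin(√p) in radians (some analyses use 2·arcsin(√p) instead); the function name `arcsine_transform` is illustrative.

```python
import math

def arcsine_transform(proportion):
    """Variance-stabilising transform for a proportion in [0, 1], in radians."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(proportion))

# Percent-correct scores are converted to proportions before transforming.
percent_correct = [50.0, 90.0, 100.0]
transformed = [arcsine_transform(p / 100.0) for p in percent_correct]
print([round(x, 3) for x in transformed])
```

The transform stretches values near 0 % and 100 %, where raw percentages are compressed, so that the variances are more comparable across conditions.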

III. Results

The results include electrophysiological data (P300 amplitude, P300 latency), behavioral data (response accuracy, reaction times), and the correlation between them.[17]

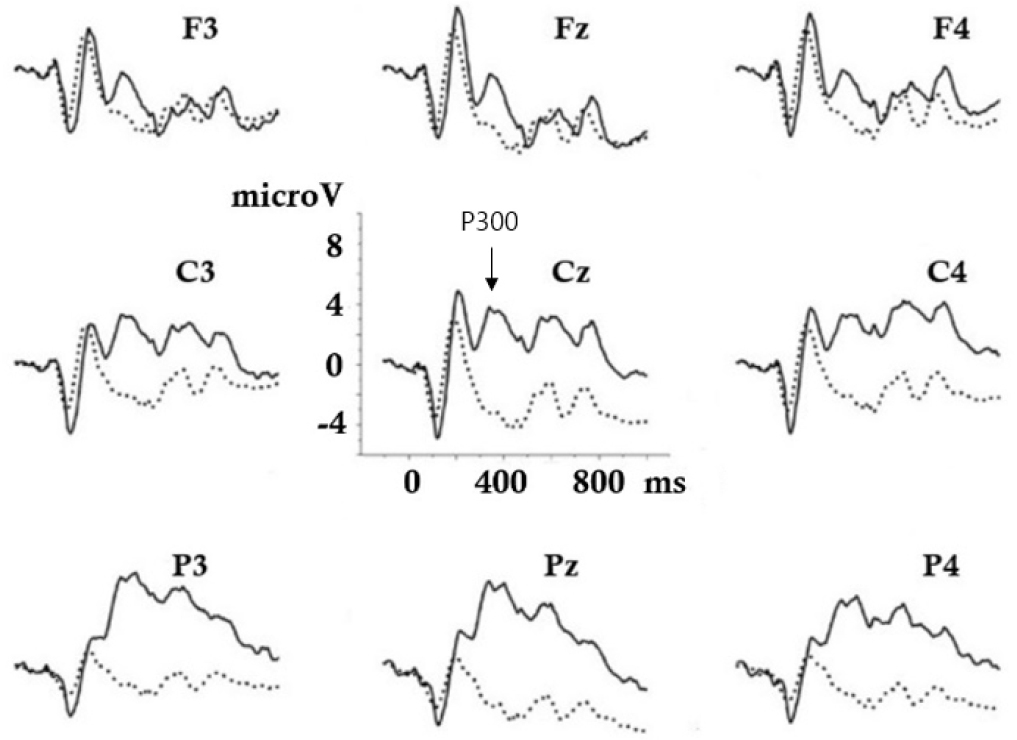

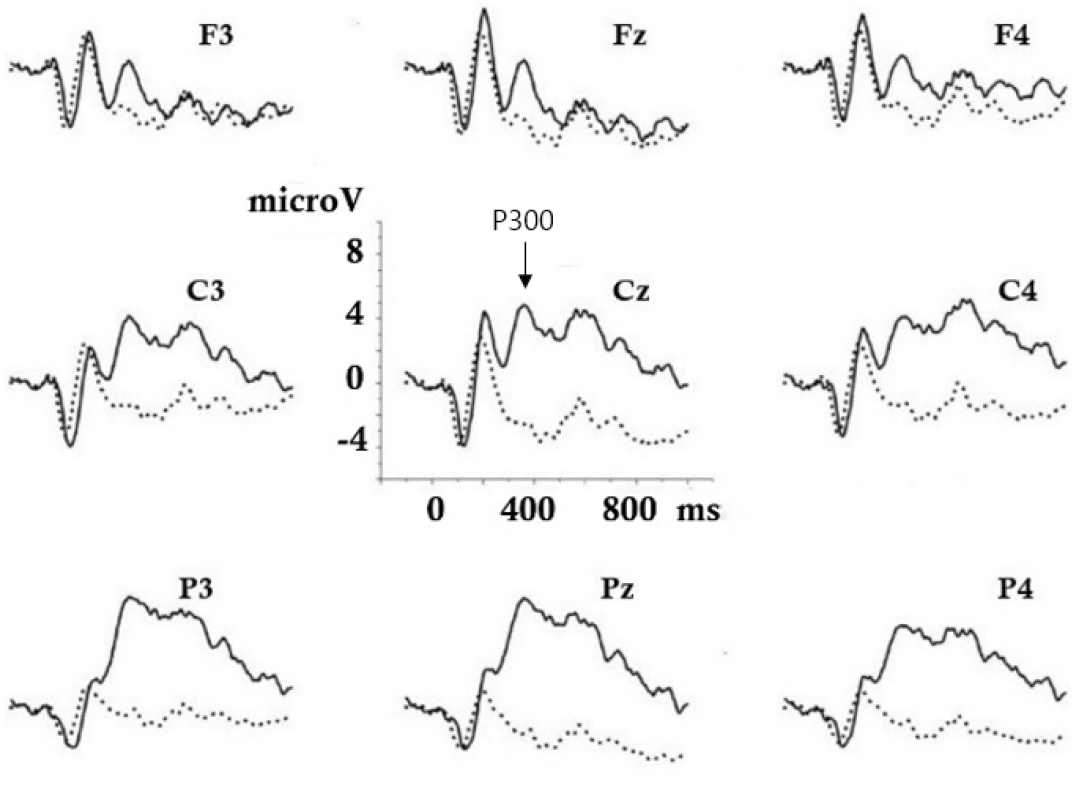

A robust P300 response was elicited by both lexical contexts at all electrodes. Figs. 4 and 5 show the grand average waveforms in the /bi/ and /pi/ contexts, respectively. P300 amplitude differed significantly across the scalp (F(1.292, 25.837) = 60.750, p < .001), being largest at parietal sites, followed by central, and smallest at frontal sites. However, neither the main effect of lexical context nor its interaction with scalp distribution showed statistical significance. The results of P300 amplitude across scalp distributions are summarized in Tables 1 and 2. There were no significant effects of lexical context, scalp distribution or their interaction on P300 latency.
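The fractional degrees of freedom in the scalp-distribution test are what one would expect from a sphericity correction, although the text does not name the correction that was applied. The sketch below, offered as a reading aid rather than a reanalysis, recovers the implied correction factor (epsilon) from the reported values; the variable names are illustrative.

```python
# Design values taken from the Methods: 21 participants, 3 scalp regions.
n_participants = 21
n_levels = 3  # frontal, central, parietal

# Uncorrected degrees of freedom for a one-factor within-subject effect.
df_effect = n_levels - 1
df_error = (n_levels - 1) * (n_participants - 1)

# Degrees of freedom as reported in the Results.
reported_df_effect = 1.292
reported_df_error = 25.837

# A sphericity correction multiplies both values by the same epsilon.
eps_from_effect = reported_df_effect / df_effect
eps_from_error = reported_df_error / df_error

print(df_effect, df_error)
print(round(eps_from_effect, 3), round(eps_from_error, 3))
```

Both ratios agree to three decimals, which supports the reading that the reported values are epsilon-adjusted versions of (2, 40).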

Table 1.

Mean and Standard Deviation (SD) of P300 amplitude across scalp distributions.

| Context | Scalp distribution | Mean | SD |
| --- | --- | --- | --- |
| /bi/ context | Frontal | 1.29 | 3.06 |
| /bi/ context | Central | 4.92 | 3.42 |
| /bi/ context | Parietal | 7.13 | 3.40 |
| /pi/ context | Frontal | 1.56 | 3.86 |
| /pi/ context | Central | 5.21 | 3.87 |
| /pi/ context | Parietal | 7.55 | 3.55 |

Table 2.

p-values of P300 amplitude across scalp distributions.

| Contrast pair | p-value |
| --- | --- |
| Frontal vs Central | <.001** |
| Central vs Parietal | <.001** |
| Frontal vs Parietal | <.001** |

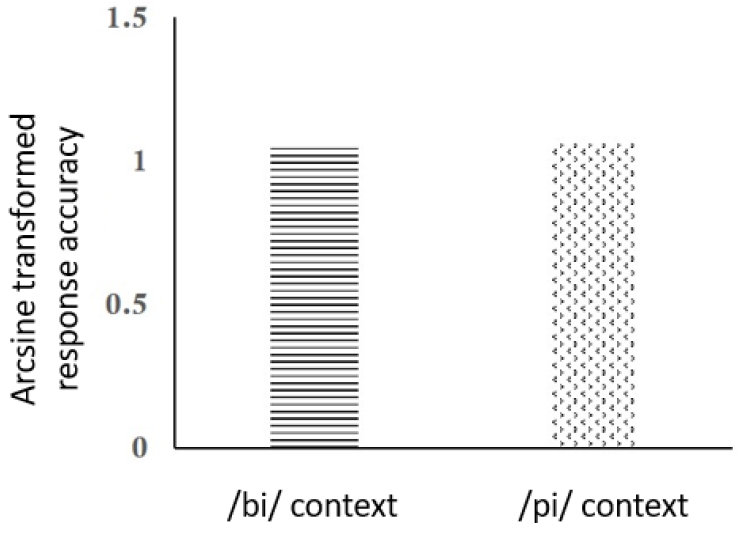

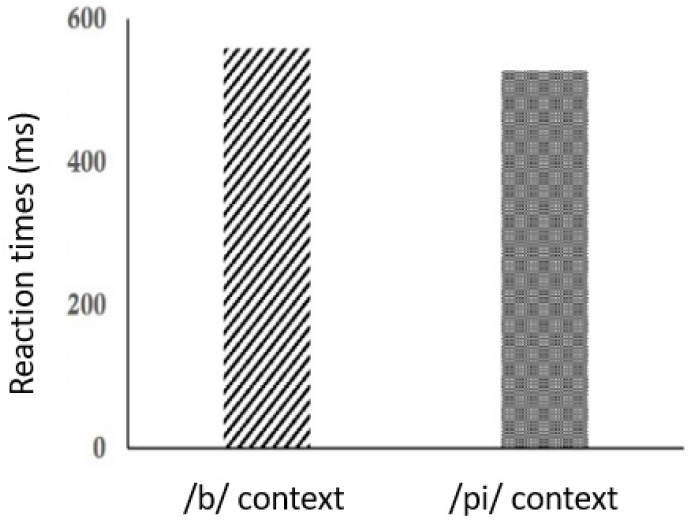

Response accuracy did not significantly differ across lexical contexts (F(1, 20) = 1.701, p = .207), while reaction times were significantly faster in the /pi/ context than in the /bi/ context (F(1, 20) = 4.946, p = .038). Tables 3 and 4, along with Figs. 6 and 7, show the results of response accuracy and reaction times across lexical contexts.

Table 3.

Mean and SD of behavioral responses across lexical contexts.

| Measure | Context | Mean | SD |
| --- | --- | --- | --- |
| Response accuracy | /bi/ | 1.05 | 0.09 |
| Response accuracy | /pi/ | 1.06 | 0.08 |
| Reaction times | /bi/ | 558.18 | 145.78 |
| Reaction times | /pi/ | 527.96 | 115.58 |

Table 4.

p-values of behavioral responses across lexical contexts.

| Measure | Contrast pair | p-value |
| --- | --- | --- |
| Response accuracy | /bi/ vs /pi/ | .207 |
| Reaction times | /bi/ vs /pi/ | .038* |

There was no significant correlation between the P300 measures (amplitude, latency) and the behavioral responses (response accuracy, reaction times).

IV. Discussion

Behavioral data revealed significantly faster reaction times in the /pi/ context than in the /bi/ context, while response accuracy remained comparable across lexical contexts. Faster reaction times suggest decreased cognitive processing demands, reflecting the influence of lexical effects, whereby ambiguous deviant stimuli were perceived more distinctly relative to the standard stimuli in the /pi/ context than in the /bi/ context. In contrast, response accuracy was unaffected by lexical influences. These findings align with previous studies[21,22] demonstrating that lexical context facilitates speech processing by accelerating perceptual decisions, which makes reaction times a more sensitive indicator of speech processing than response accuracy. Reaction times are particularly responsive to subtle lexical effects in speech perception, reflecting the efficiency and speed of cognitive processing rather than being limited to binary accuracy outcomes.[17,23]

Electrophysiological findings reveal that P300 was clearly elicited, with more positive voltage for the deviant stimuli than for the standard stimuli, in both lexical contexts. It is well established that P300 amplitude is highly sensitive to stimulus probability: amplitude correlates inversely with probability, so less frequent stimuli elicit larger amplitudes. This relationship arises because rare, unexpected events capture more attention and require more cognitive resources to process.[24] As expected, the current findings showed that P300 amplitude was most prominent at posterior sites, followed by central sites, and least pronounced at frontal sites, while P300 latency was stable across the scalp. Previous studies have consistently reported that P300 amplitude varies across the scalp, with the largest amplitudes over centroparietal sites, reflecting the activation of posterior cortical regions involved in attention and memory processes. In contrast, P300 latency remains stable, highlighting its robustness as an index of stimulus evaluation timing.[17,25,26]

Interestingly, although the behavioral data clearly demonstrated a lexical effect on the categorical perception of speech, P300 amplitude was unaffected by lexical context. No significant correlation was found between P300 and behavioral responses. Given that P300 reflects early attentional processing related to acoustic, phonetic, and phonological features,[8,9,10,11] it may be insensitive to lexical influences on categorical perception despite clear behavioral evidence. These findings suggest that lexical influences on speech perception arise at post-perceptual stages beyond the pre-lexical processing reflected by P300. This supports hierarchical models, in which acoustic-phonetic processing operates independently of lexical-semantic integration. The behavioral lexical effect on speech perception may result from later reinterpretation, with lexical context exerting influence at higher stages, possibly indexed by the N400, or even during higher-order decision-making processes.[12,17,27]

The lack of P300 sensitivity to lexical effects could be attributed to the nature of the discrimination task, even though the P300 might otherwise reflect the Ganong effect. A recent study reported that ERPs revealed lexical biasing within 200 ms during a labeling task, providing neural evidence in support of the Ganong effect.[28] The discrimination task may have emphasized acoustic properties and limited access to lexical representations, which typically emerge through dynamic interaction between pre-lexical and lexical information in natural listening environments. For this very reason, however, the discrimination task could offer valuable insights into whether speech perception is inherently modulated by lexical context, providing a framework to disentangle the complex interplay between perceptual and post-perceptual processes.

The limitations of the current study may involve the speech stimuli and lexical contexts used, which may not have been fully optimized to capture subtle lexical effects. Although Stimulus 4 and the lexical contexts were chosen based on prior labeling performance to enhance lexical effects, they may still have introduced potential confounding factors. Future research would benefit from more controlled designs and stimulus selection to further validate and refine the lexical effect on speech perception.

V. Conclusions

The current findings suggest that speech perception at the pre-lexical level remains unaffected by lexical influences, implying that the integration of lexical context likely occurs at post-perceptual stages beyond the processing indexed by the P300 component. These results lend support to the autonomous model of speech perception. While electrophysiological data did not reflect lexical effects, behavioral data, specifically reaction times, demonstrated sensitivity to lexical context, highlighting the intricate nature of speech perception. Future research employing ERP components such as the N400 or higher-order decision-related potentials is needed to verify these interpretations and further elucidate the neural mechanisms underlying lexical integration in speech perception.